How much does your framework choice affect performance? The answer may surprise you.

Authors’ Note: We’re using the word “framework” loosely to refer to platforms, micro-frameworks, and full-stack frameworks. We have our own personal favorites among these frameworks, but we’ve tried our best to give each a fair shot.

Show me the winners!

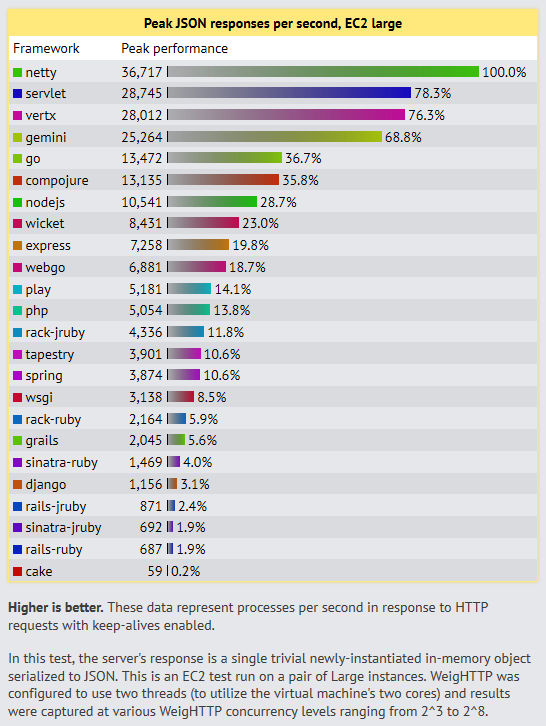

We know you’re curious (we were too!) so here is a chart of representative results.

Whoa! Netty, Vert.x, and Java servlets are fast, but we were surprised how much faster they are than Ruby, Django, and friends. Before we did the benchmarks, we were guessing there might be a 4x difference. But a 40x difference between Vert.x and Ruby on Rails is staggering. And let us simply draw the curtain of charity over the CakePHP results.

If these results were surprising to you, too, then read on so we can share our methodology and other test results. Even better, maybe you can spot a place where we mistakenly hobbled a framework and we can improve the tests. We’ve done our best, but we are not experts in most of them so help is welcome!

Motivation

Among the many factors to consider when choosing a web development framework, raw performance is easy to objectively measure. Application performance can be directly mapped to hosting dollars, and for a start-up company in its infancy, hosting costs can be a pain point. Weak performance can also cause premature scale pain, user experience degradation, and associated penalties levied by search engines.

What if building an application on one framework meant that at the very best your hardware is suitable for one tenth as much load as it would be had you chosen a different framework? The differences aren’t always that extreme, but in some cases, they might be. It’s worth knowing what you’re getting into.

Simulating production environments

For this exercise, we aimed to configure every framework according to the best practices for production deployments gleaned from documentation and popular community opinion. Our goal is to approximate a sensible production deployment as accurately as possible. For each framework, we describe the configuration approach we’ve used and cite the sources recommending that configuration.

We want it all to be as transparent as possible, so we have posted our test suites on GitHub.

Results

We ran each test on EC2 and our i7 hardware. See Environment Details below for more information.

JSON serialization test

First up is plain JSON serialization on Amazon EC2 large instances. This is the same chart shown in the introduction above.

Dedicated hardware

Here is the same test on our Sandy Bridge i7 hardware.

Database access test (single query)

How many requests can be handled per second if each request is fetching a random record from a data store? Starting again with EC2.

Dedicated hardware

Database access test (multiple queries)

The following tests are all run at 256 concurrency and vary the number of database queries per request. The tests run at 1, 5, 10, 15, and 20 queries per request. The 1-query samples, leftmost on the line charts, should be similar (within sampling error) to the results of the single-query test above.

Dedicated hardware

How we designed the tests

This exercise aims to provide a “baseline” for performance across the variety of frameworks. By baseline we mean the starting point, from which any real-world application’s performance can only get worse. We aim to know the upper bound being set on an application’s performance per unit of hardware by each platform and framework.

But we also want to exercise some of each framework’s components, such as its JSON serializer and data-store/database mapping. While each test boils down to a measurement of the number of requests per second that can be processed by a single server, we are exercising a sample of the components provided by modern frameworks, so we believe it’s a reasonable starting point.

For the data-connected test, we’ve deliberately constructed the tests to avoid any framework-provided caching layer. We want this test to require repeated requests to an external service (MySQL or MongoDB, for example) so that we exercise the framework’s data mapping code. Although we expect that the external service is itself caching the small number of rows our test consumes, the framework is not allowed to avoid the network transmission and data mapping portion of the work.

Not all frameworks provide components for all of the tests. For these situations, we attempted to select a popular best-of-breed option.

Each framework was tested using 2^3 to 2^8 (8, 16, 32, 64, 128, and 256) request concurrency. On EC2, WeigHTTP was configured to use two threads (one per core) and on our i7 hardware, it was configured to use eight threads (one per HT core). For each test, WeigHTTP simulated 100,000 HTTP requests with keep-alives enabled.
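The concurrency sweep described above could be scripted roughly as follows. This is a sketch only: the helper name `weighttp_commands` and the URL are made up for illustration, and it assumes WeigHTTP's `-n` (request count), `-c` (concurrency), `-t` (threads), and `-k` (keep-alive) options.

```python
def weighttp_commands(url, threads, requests=100_000):
    """Build one WeigHTTP command line per concurrency level (2^3 .. 2^8)."""
    levels = [2 ** exp for exp in range(3, 9)]  # 8, 16, 32, 64, 128, 256
    return [
        ["weighttp", "-n", str(requests), "-c", str(level),
         "-t", str(threads), "-k", url]
        for level in levels
    ]


# Two threads as on the EC2 instances; pass threads=8 for the i7 runs.
# The URL is a placeholder, not the address used in the actual tests.
for command in weighttp_commands("http://app-server:8080/json", threads=2):
    print(" ".join(command))
```

Each printed line is one run of 100,000 keep-alive requests at a single concurrency level.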

For each test, the framework was warmed up by running a full test prior to capturing performance numbers.

Finally, for each framework, we collected the framework’s best performance across the various concurrency levels for plotting as peak bar charts.
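Picking the peak for the bar charts amounts to taking the maximum over the concurrency levels. A minimal sketch, with invented numbers that are not measurements from these tests:

```python
def peak_rps(results):
    """Return (concurrency, requests_per_second) for the best concurrency level."""
    return max(results.items(), key=lambda item: item[1])


# Hypothetical requests-per-second figures keyed by concurrency level.
sample = {8: 9100.0, 16: 12400.0, 32: 14800.0, 64: 15900.0, 128: 15200.0, 256: 14100.0}

concurrency, rps = peak_rps(sample)
print(f"peak: {rps:.0f} req/s at concurrency {concurrency}")
# prints "peak: 15900 req/s at concurrency 64"
```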

We used two machines for all tests, configured in the following roles:

- Application server. This machine is responsible for hosting the web application exclusively. Note, however, that when community best practices specified use of a web server in front of the application container, we had the web server installed on the same machine.

- Load client and database server. This machine is responsible for generating HTTP traffic to the application server using WeigHTTP and also for hosting the database server. In all of our tests, the database server (MySQL or MongoDB) used very little CPU time, and WeigHTTP was not starved of CPU resources. In the database tests, the network was being used to provide result sets to the application server and to provide HTTP responses in the opposite direction. However, even with the quickest frameworks, network utilization was lower in database tests than in the plain JSON tests, so this is unlikely to be a concern.

Ultimately, a three-machine configuration would dismiss the concern of double-duty for the second machine. However, we doubt that the results would be noticeably different.

The Tests

We ran three types of tests. Not all tests were run for all frameworks. See details below.

JSON serialization

For this test, each framework simply responds with the following object, encoded using the framework’s JSON serializer.

{"message" : "Hello, World!"}

With the content type set to application/json. If the framework provides no JSON serializer, a best-of-breed for the platform is selected. For example, on the Java platform, Jackson was used for frameworks that do not provide a serializer.
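For readers who want to see the shape of this test outside any particular framework, here is a minimal sketch as a plain Python WSGI application. It is not one of the tested implementations; the names `app` and `serve` and the port are illustrative assumptions.

```python
import json


def app(environ, start_response):
    """Minimal WSGI application: serialize the message object on every request."""
    body = json.dumps({"message": "Hello, World!"}).encode("utf-8")
    start_response("200 OK", [
        ("Content-Type", "application/json"),
        ("Content-Length", str(len(body))),
    ])
    return [body]


def serve(port=8080):
    """Serve the app with the standard library's reference server (blocks forever)."""
    from wsgiref.simple_server import make_server
    make_server("", port, app).serve_forever()
```

Calling `serve()` starts a single-threaded reference server, which is fine for trying the endpoint but is not a production deployment of the kind benchmarked here.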

Database access (single query)

In this test, we use the ORM of choice for each framework to grab one simple object selected at random from a table containing 10,000 rows. We use the same JSON serialization tested earlier to serialize that object as JSON. Caveat: when the data store provides data as JSON in situ (such as with the MongoDB tests), no transcoding is done; the string of JSON is sent as-is.

As with JSON serialization, we’ve selected a best-of-breed ORM when the framework is agnostic. For example, we used Sequelize for the JavaScript MySQL tests.

We tested with MySQL for most frameworks, but where MongoDB is more conventional (as with node.js), we tested that instead or in addition. We also did some spot tests with PostgreSQL but have not yet captured any of those results in this effort. Preliminary results showed RPS performance about 25% lower than with MySQL. Since PostgreSQL is considered favorable from a durability perspective, we plan to include more PostgreSQL testing in the future.

Database access (multiple queries)

This test repeats the work of the single-query test with an adjustable queries-per-request parameter. Tests are run at 5, 10, 15, and 20 queries per request. Each query selects a random row from the same table exercised in the previous test with the resulting array then serialized to JSON as a response.
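The single-query and multiple-query tests share one shape: pick a random ID, fetch that row, repeat, then serialize. The sketch below shows that shape using SQLite in memory as a stand-in for MySQL or MongoDB (an assumption made so the example is self-contained) and raw SQL rather than an ORM, so it is closest to the "raw" variants. The function names are illustrative.

```python
import json
import random
import sqlite3

DB_ROWS = 10_000


def make_db():
    """Create an in-memory World table with 10,000 rows (SQLite stand-in)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE World (id INTEGER PRIMARY KEY, randomNumber INTEGER)")
    conn.executemany(
        "INSERT INTO World (id, randomNumber) VALUES (?, ?)",
        ((i, random.randint(1, DB_ROWS)) for i in range(1, DB_ROWS + 1)),
    )
    return conn


def db(conn, queries=1):
    """Fetch `queries` random rows, one query each, and serialize them as JSON."""
    worlds = []
    for _ in range(queries):
        row_id = random.randint(1, DB_ROWS)  # inclusive on both ends
        row = conn.execute(
            "SELECT id, randomNumber FROM World WHERE id = ?", (row_id,)
        ).fetchone()
        worlds.append({"id": row[0], "randomNumber": row[1]})
    return json.dumps(worlds)


conn = make_db()
print(db(conn))              # single-query test: one row
print(db(conn, queries=5))   # multiple-query test: five rows, five queries
```

Note that each row is fetched with its own query on purpose; batching the IDs into one `IN (...)` query would defeat the point of the test.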

This test is intended to illustrate how all frameworks inevitably will converge to zero requests per second as the complexity of each request increases. Admittedly, especially at 20 queries per request, this particular test is unnaturally database heavy compared to real-world applications. Only grossly inefficient applications or uncommonly complex requests would make that many database queries per request.
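A back-of-the-envelope model shows why the curves fall toward zero. If each request costs a fixed framework overhead plus a per-query cost, throughput per worker is roughly 1000 / (overhead_ms + queries × query_ms). The timings below are invented for illustration and are not measurements from these tests.

```python
def requests_per_second(queries, overhead_ms, query_ms, workers=1):
    """Idealized throughput: each request pays overhead plus N sequential queries."""
    per_request_ms = overhead_ms + queries * query_ms
    return workers * 1000.0 / per_request_ms


# Two hypothetical frameworks that differ only in per-request overhead;
# both pay the same assumed 0.5 ms per database query.
for n in (1, 5, 10, 15, 20):
    fast = requests_per_second(n, overhead_ms=0.05, query_ms=0.5)
    slow = requests_per_second(n, overhead_ms=2.0, query_ms=0.5)
    print(f"{n:2d} queries: fast={fast:7.1f} req/s  slow={slow:6.1f} req/s  "
          f"ratio={fast / slow:.1f}x")
```

In this simplified model the per-query term dominates as the query count grows, so both frameworks slow down and the gap between them narrows. Real frameworks also differ in per-query mapping cost, which the model ignores.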

Environment Details


Hardware

Load simulator

Databases

Ruby

JavaScript

PHP


Operating system

Web servers

Python

Go

Java / JVM


Notes

- For the database tests, any framework with the suffix “raw” in its name is using its platform’s raw database connectivity without an object-relational map (ORM) of any flavor. For example, servlet-raw is using raw JDBC. All frameworks without the “raw” suffix in their name are using either the framework-provided ORM or a best-of-breed for the platform (e.g., ActiveRecord).

Code examples

You can find the full source code for all of the tests on GitHub. Below are the relevant portions of the code to fetch a configurable number of random database records, serialize the list of records as JSON, and then send the JSON as an HTTP response.

Cake

    public function index() {
        $query_count = $this->request->query('queries');
        if ($query_count == null) {
            $query_count = 1;
        }
        $arr = array();
        for ($i = 0; $i < $query_count; $i++) {
            $id = mt_rand(1, 10000);
            $world = $this->World->find('first', array('conditions' => array('id' => $id)));
            $arr[] = array("id" => $world['World']['id'], "randomNumber" => $world['World']['randomNumber']);
        }
        $this->set('worlds', $arr);
        $this->set('_serialize', array('worlds'));
    }

Compojure

    (defn get-world []
      (let [id (inc (rand-int 10000))] ; Num between 1 and 10,000
        (select world
                (fields :id :randomNumber)
                (where {:id id}))))

    (defn run-queries [queries]
      (vec                        ; Return as a vector
        (flatten                  ; Make it a list of maps
          (take
            queries               ; Number of queries to run
            (repeatedly get-world)))))

Django

    def db(request):
        queries = int(request.GET.get('queries', 1))
        worlds = []
        for i in range(queries):
            worlds.append(World.objects.get(id=random.randint(1, 10000)))
        return HttpResponse(serializers.serialize("json", worlds), mimetype="application/json")

Express

    app.get('/mongoose', function(req, res) {
      var queries = req.query.queries || 1,
          worlds = [],
          queryFunctions = [];
      for (var i = 1; i <= queries; i++) {
        queryFunctions.push(function(callback) {
          MWorld.findOne({ id: (Math.floor(Math.random() * 10000) + 1) })
            .exec(function (err, world) {
              worlds.push(world);
              callback(null, 'success');
            });
        });
      }
      async.parallel(queryFunctions, function(err, results) {
        res.send(worlds);
      });
    });

Gemini

    @PathSegment
    public boolean db() {
        final Random random = ThreadLocalRandom.current();
        final int queries = context().getInt("queries", 1, 1, 500);
        final World[] worlds = new World[queries];
        for (int i = 0; i < queries; i++) {
            worlds[i] = store.get(World.class, random.nextInt(DB_ROWS) + 1);
        }
        return json(worlds);
    }

Grails

    def db() {
        def random = ThreadLocalRandom.current()
        def queries = params.queries ? params.int('queries') : 1
        def worlds = []
        for (int i = 0; i < queries; i++) {
            worlds.add(World.read(random.nextInt(10000) + 1))
        }
        render worlds as JSON
    }

Node.js

    if (path === '/mongoose') {
      var queries = 1;
      var worlds = [];
      var queryFunctions = [];
      var values = url.parse(req.url, true);
      if (values.query.queries) {
        queries = values.query.queries;
      }
      res.writeHead(200, {'Content-Type': 'application/json; charset=UTF-8'});
      for (var i = 1; i <= queries; i++) {
        queryFunctions.push(function(callback) {
          MWorld.findOne({ id: (Math.floor(Math.random() * 10000) + 1) })
            .exec(function (err, world) {
              worlds.push(world);
              callback(null, 'success');
            });
        });
      }
      async.parallel(queryFunctions, function(err, results) {
        res.end(JSON.stringify(worlds));
      });
    }

PHP (Raw)

    $query_count = 1;
    if (!empty($_GET)) {
        $query_count = $_GET["queries"];
    }
    $arr = array();
    $statement = $pdo->prepare("SELECT * FROM World WHERE id = :id");
    for ($i = 0; $i < $query_count; $i++) {
        $id = mt_rand(1, 10000);
        $statement->bindValue(':id', $id, PDO::PARAM_INT);
        $statement->execute();
        $row = $statement->fetch(PDO::FETCH_ASSOC);
        $arr[] = array("id" => $id, "randomNumber" => $row['randomNumber']);
    }
    echo json_encode($arr);

PHP (ORM)

    $query_count = 1;
    if (!empty($_GET)) {
        $query_count = $_GET["queries"];
    }
    $arr = array();
    for ($i = 0; $i < $query_count; $i++) {
        $id = mt_rand(1, 10000);
        $world = World::find_by_id($id);
        $arr[] = $world->to_json();
    }
    echo json_encode($arr);

Play

    public static Result db(Integer queries) {
        final Random random = ThreadLocalRandom.current();
        final World[] worlds = new World[queries];
        for (int i = 0; i < queries; i++) {
            worlds[i] = World.find.byId((long)(random.nextInt(DB_ROWS) + 1));
        }
        return ok(Json.toJson(worlds));
    }

Rails

    def db
      queries = params[:queries] || 1
      results = []
      (1..queries.to_i).each do
        results << World.find(Random.rand(10000) + 1)
      end
      render :json => results
    end

Servlet

    res.setHeader(HEADER_CONTENT_TYPE, CONTENT_TYPE_JSON);
    final DataSource source = mysqlDataSource;
    int count = 1;
    try {
        count = Integer.parseInt(req.getParameter("queries"));
    } catch (NumberFormatException nfexc) {
        // Handle exception
    }
    final World[] worlds = new World[count];
    final Random random = ThreadLocalRandom.current();
    try (Connection conn = source.getConnection()) {
        try (PreparedStatement statement = conn.prepareStatement(
                DB_QUERY,
                ResultSet.TYPE_FORWARD_ONLY,
                ResultSet.CONCUR_READ_ONLY)) {
            for (int i = 0; i < count; i++) {
                final int id = random.nextInt(DB_ROWS) + 1;
                statement.setInt(1, id);
                try (ResultSet results = statement.executeQuery()) {
                    if (results.next()) {
                        worlds[i] = new World(id, results.getInt("randomNumber"));
                    }
                }
            }
        }
    } catch (SQLException sqlex) {
        System.err.println("SQL Exception: " + sqlex);
    }
    try {
        mapper.writeValue(res.getOutputStream(), worlds);
    } catch (IOException ioe) {
    }

Sinatra

    get '/db' do
      queries = params[:queries] || 1
      results = []
      (1..queries.to_i).each do
        results << World.find(Random.rand(10000) + 1)
      end
      results.to_json
    end

Spring

    @RequestMapping(value = "/db")
    public Object index(HttpServletRequest request,
            HttpServletResponse response, Integer queries) {
        if (queries == null) {
            queries = 1;
        }
        final World[] worlds = new World[queries];
        final Random random = ThreadLocalRandom.current();
        final Session session = HibernateUtil.getSessionFactory().openSession();
        for (int i = 0; i < queries; i++) {
            worlds[i] = (World)session.byId(World.class).load(random.nextInt(DB_ROWS) + 1);
        }
        session.close();
        try {
            new MappingJackson2HttpMessageConverter().write(
                    worlds, MediaType.APPLICATION_JSON,
                    new ServletServerHttpResponse(response));
        } catch (IOException e) {
            // Handle exception
        }
        return null;
    }

Tapestry

    StreamResponse onActivate() {
        int queries = 1;
        String qString = this.request.getParameter("queries");
        if (qString != null) {
            queries = Integer.parseInt(qString);
        }
        if (queries <= 0) {
            queries = 1;
        }
        final World[] worlds = new World[queries];
        final Random rand = ThreadLocalRandom.current();
        for (int i = 0; i < queries; i++) {
            worlds[i] = (World)session.get(World.class, new Integer(rand.nextInt(DB_ROWS) + 1));
        }
        String response = "";
        try {
            response = HelloDB.mapper.writeValueAsString(worlds);
        } catch (IOException ex) {
            // Handle exception
        }
        return new TextStreamResponse("application/json", response);
    }

Vert.x

    private void handleDb(final HttpServerRequest req) {
        int queriesParam = 1;
        try {
            queriesParam = Integer.parseInt(req.params().get("queries"));
        } catch(Exception e) {
        }
        final DbHandler dbh = new DbHandler(req, queriesParam);
        final Random random = ThreadLocalRandom.current();
        for (int i = 0; i < queriesParam; i++) {
            this.getVertx().eventBus().send(
                "hello.persistor",
                new JsonObject()
                    .putString("action", "findone")
                    .putString("collection", "world")
                    .putObject("matcher", new JsonObject().putNumber("id",
                        (random.nextInt(10000) + 1))), dbh);
        }
    }

    class DbHandler implements Handler<Message<JsonObject>> {
        private final HttpServerRequest req;
        private final int queries;
        private final List<Object> worlds = new CopyOnWriteArrayList<>();

        public DbHandler(HttpServerRequest request, int queriesParam) {
            this.req = request;
            this.queries = queriesParam;
        }

        @Override
        public void handle(Message<JsonObject> reply) {
            final JsonObject body = reply.body;
            if ("ok".equals(body.getString("status"))) {
                this.worlds.add(body.getObject("result"));
            }
            if (this.worlds.size() == this.queries) {
                try {
                    final String result = mapper.writeValueAsString(worlds);
                    final int contentLength = result
                        .getBytes(StandardCharsets.UTF_8).length;
                    this.req.response.putHeader("Content-Type",
                        "application/json; charset=UTF-8");
                    this.req.response.putHeader("Content-Length", contentLength);
                    this.req.response.write(result);
                    this.req.response.end();
                } catch (IOException e) {
                    req.response.statusCode = 500;
                    req.response.end();
                }
            }
        }
    }

Wicket

    protected ResourceResponse newResourceResponse(Attributes attributes) {
        final int queries = attributes.getRequest().getQueryParameters()
            .getParameterValue("queries").toInt(1);
        final World[] worlds = new World[queries];
        final Random random = ThreadLocalRandom.current();
        final ResourceResponse response = new ResourceResponse();
        response.setContentType("application/json");
        response.setWriteCallback(new WriteCallback() {
            public void writeData(Attributes attributes) {
                final Session session = HibernateUtil.getSessionFactory()
                    .openSession();
                for (int i = 0; i < queries; i++) {
                    worlds[i] = (World)session.byId(World.class)
                        .load(random.nextInt(DB_ROWS) + 1);
                }
                session.close();
                try {
                    attributes.getResponse().write(HelloDbResponse.mapper
                        .writeValueAsString(worlds));
                } catch (IOException ex) {
                }
            }
        });
        return response;
    }

Expected questions

We expect that you might have a bunch of questions. Here are some that we’re anticipating. But please contact us if you have a question we’re not dealing with here or just want to tell us we’re doing it wrong.

- “You configured framework x incorrectly, and that explains the numbers you’re seeing.” Whoops! Please let us know how we can fix it, or submit a GitHub pull request, so we can get it right.

- “Why WeigHTTP?” Although many web performance tests use ApacheBench from Apache to generate HTTP requests, we have opted to use WeigHTTP from the lighttpd team. ApacheBench remains a single-threaded tool, meaning that for higher-performance test scenarios, ApacheBench itself is a limiting factor. WeigHTTP is essentially a multithreaded clone of ApacheBench. If you have a recommendation for an even better benchmarking tool, please let us know.

- “Doesn’t benchmarking on Amazon EC2 invalidate the results?” Our opinion is that doing so confirms precisely what we’re trying to test: performance of web applications within realistic production environments. Selecting EC2 as a platform also allows the tests to be readily verified by anyone interested in doing so. However, we’ve also executed tests on our Core i7 (Sandy Bridge) workstations running Ubuntu 12.04 as a non-virtualized sanity check. Doing so confirmed our suspicion that the ranked order and relative performance across frameworks is mostly consistent between EC2 and physical hardware. That is, while the EC2 instances were slower than the physical hardware, they were slower by roughly the same proportion across the spectrum of frameworks.

- “Why include this Gemini framework I’ve never heard of?” We have included our in-house Java web framework, Gemini, in our tests. We’ve done so because it’s of interest to us. You can consider it a stand-in for any relatively lightweight minimal-locking Java framework. While we’re proud of how it performs among the well-established field, this exercise is not about Gemini. We routinely use other frameworks on client projects and we want this data to inform our recommendations for new projects.

- “Why is JRuby performance all over the map?” During the evolution of this project, in some test runs, JRuby would slightly edge out traditional Ruby, and in some cases, with the same test code, the opposite would be true. We also don’t have an explanation for the weak performance of Sinatra on JRuby, which is no better than Rails. Ultimately we’re not sure about the discrepancy. Hopefully an expert in JRuby can help us here.

- “Framework X has in-memory caching, why don’t you use that?” In-memory caching, as provided by Gemini and some other frameworks, yields higher performance than repeatedly hitting a database, but isn’t available in all frameworks, so we omitted in-memory caching from these tests.

- “What about other caching approaches, then?” Remote-memory or near-memory caching, as provided by Memcached and similar solutions, also improves performance and we would like to conduct future tests simulating a more expensive query operation versus Memcached. However, curiously, in spot tests, some frameworks paired with Memcached were conspicuously slower than other frameworks directly querying the authoritative MySQL database (recognizing, of course, that MySQL had its entire data-set in its own memory cache). For simple “get row ID n” and “get all rows” style fetches, a fast framework paired with MySQL may be faster and easier to work with than a slow framework paired with Memcached.

- “Do all the database tests use connection pooling?” Sadly, Django provides no connection pooling and in fact closes and re-opens a connection for every request. All the other tests use pooling.

- “What is Resin? Why aren’t you using Tomcat for the Java frameworks?” Resin is a Java application server. The GPL version that we used for our tests is a relatively lightweight Servlet container. Although we recommend Caucho Resin for Java deployments, in our tests, we found Tomcat to be easier to configure. We ultimately dropped Tomcat from our tests because Resin was slightly faster across all frameworks.

- “Why don’t you test framework X?” We’d love to, if we can find the time. Even better, craft the test yourself and submit a GitHub pull request so we can get it in there faster!

- “Why doesn’t your test include more substantial algorithmic work, or building an HTML response with a server-side template?” Great suggestion. We hope to in the future!

- “Why are you using a (slightly) old version of framework X?” It’s nothing personal! We tried to keep everything fully up-to-date, but with so many frameworks it became a never-ending game of whack-a-mole. If you think an update will affect the results, please let us know (or submit a GitHub pull request) and we’ll get it updated!

Conclusion

Let go of your technology prejudices.

We think it is important to know about as many good tools as possible to help make the best choices you can. Hopefully we’ve helped with one aspect of that.

Thanks for sticking with us through all of this! We had fun putting these tests together, and experienced some genuine surprises with the results. Hopefully others find it interesting too. Please let us know what you think or submit GitHub pull requests to help us out.

About TechEmpower

We provide web and mobile application development services and are passionate about application performance. Read more about what we do.