Fashion is having its moment in the metaverse.

A riot of luxury labels, music, and games are vying for attention in the virtual world. And as physical events, and the entertainment industry that depends on them, shut down, virtual things have come to epitomize the popular culture of the pandemic.

It’s creating an environment where imagination and technical ability, not wealth, are the only barriers to accumulating the status symbols that once only money and fame could buy.

Whether it’s famous designers like Marc Jacobs, Sandy Liang, or Valentino dropping styles in Nintendo’s breakout hit, Animal Crossing: New Horizons; HypeBae’s plans to host a fashion show later this month in the game; or various crossovers between Epic Games’ Fortnite and brands like Supreme (which pre-date the pandemic), fashion is tapping into gaming culture to maintain its relevance.

One entrepreneur who’s spent time on both sides of the business, as a startup founder and as an employee of one of the biggest brands in athletic wear, has launched a new app to try to build a bridge between the physical and virtual fashion worlds.

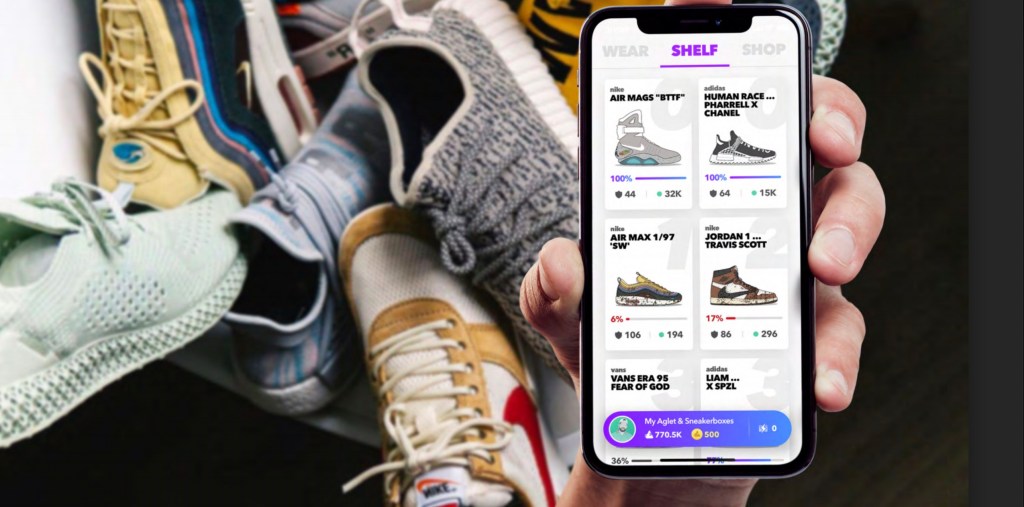

Its goal is to give hypebeasts a chance to collect virtual versions of their physical objects of desire and win points to maybe buy the gear they crave, while also providing a showcase where brands can discover new design talent to make the next generation of cult collaborations and launch careers.

https://www.instagram.com/p/B_szSLRDdW3/

Aglet’s Phase 1

The app, called Aglet, was created by Ryan Mullins, the former head of digital innovation strategy for Adidas, and it’s offering a way to collect virtual versions of limited edition sneakers and, eventually, design tools so all the would-be Virgil Ablohs and Kanye Wests of the world can make their own shoes for the metaverse.

When TechCrunch spoke with Mullins last month, he was still stuck in Germany. His plans for the company’s launch, along with his own planned relocation to Los Angeles, had changed dramatically since travel was put on hold and nations entered lockdown to stop the spread of COVID-19.

Initially, the app was intended to be a Pokemon Go for sneakerheads. Limited edition “drops” of virtual sneakers would happen at locations around a city and players could go to those spots and add the virtual sneakers to their collection. Players earned points for traveling to various spots, and those points could be redeemed for in-app purchases or discounts at stores.

“We’re converting your physical activity into a virtual currency that you can spend in stores to buy new brands,” Mullins said. “Brands can have challenges and you have to complete two or three challenges in your city as you compete on that challenge the winner will get prizes.”

Aglet determines how many points a player earns based on the virtual shoes they choose to wear on their expeditions. The app offers a range of virtual sneakers, from Air Force 1s to Yeezys, and the more expensive or rare the shoe, the more points a player earns for “stepping out” in it. Over time, shoes will wear out and need to be replaced, ideally driving more stickiness for the app.
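For readers curious how such a mechanic might fit together, here is a minimal sketch of the loop described above: rarer shoes earn more per step, and every shoe eventually wears out. It is not Aglet's actual code. The class name, the `points_per_step` and `durability` fields, and all of the numbers are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class VirtualSneaker:
    name: str
    points_per_step: float  # hypothetical: rarer or pricier shoes earn more
    durability: int         # hypothetical: steps left before the shoe wears out


def step_out(shoe: VirtualSneaker, steps: int) -> float:
    """Return points earned for a walk; only steps the shoe can survive count."""
    usable_steps = min(steps, shoe.durability)
    shoe.durability -= usable_steps  # the shoe wears down as it is used
    return usable_steps * shoe.points_per_step


# Illustrative values only; the article does not give real point rates.
rare_pair = VirtualSneaker(name="Hypothetical Rare Pair", points_per_step=2.0, durability=100)
first_walk = step_out(rare_pair, 60)   # 60 usable steps at 2.0 points each
second_walk = step_out(rare_pair, 60)  # only 40 steps of wear remain
```

Once `durability` reaches zero the shoe earns nothing further, which is the point at which a player would need a replacement.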

Currency for in-app purchases can be bought for anywhere from $1 (for 5 “Aglets”) to $80 (for 1,000 “Aglets”). As players collect shoes they can display them on their in-app virtual shelves and potentially trade them with other players.
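A quick back-of-the-envelope calculation shows what those two price points imply per unit of currency. The sketch below uses only the two packs quoted above; the variable names are illustrative, and any intermediate pack sizes are unknown.

```python
from fractions import Fraction

# The two price points quoted in the article: $1 for 5 Aglets, $80 for 1,000 Aglets.
small_pack = Fraction(1, 5)       # dollars per Aglet in the smallest pack
large_pack = Fraction(80, 1000)   # dollars per Aglet in the largest pack

# Fraction keeps the arithmetic exact, avoiding floating-point rounding.
discount = 1 - large_pack / small_pack

print(f"Smallest pack: ${float(small_pack):.2f} per Aglet")
print(f"Largest pack:  ${float(large_pack):.2f} per Aglet")
print(f"Bulk discount: {float(discount):.0%}")
```

In other words, buying in bulk drops the price from 20 cents to 8 cents per Aglet, a 60% discount.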

When the lockdowns and shelter-in-place orders came through, Mullins and his designers quickly shifted to create the “pandemic mode” for the game, where users can go anywhere on a map and simulate the game.

“Our plan was to have an LA specific release and do a competition, but that was obviously thrown off,” Mullins said.

The app has antecedents like Nike’s SNKRS, which offered limited edition drops to users and geo-located places where folks could find shoes from its various collaborations, as Input noted when it covered Aglet’s April launch.

While Mullins’ vision for Aglet’s current incarnation is an interesting attempt to weave the threads of gaming and sneaker culture into a new kind of augmented reality-enabled shopping experience, there’s a step beyond the game universes that Mullins wants to create.

Image Credits: Adidas

The future of fashion discovery could be in the metaverse

“My proudest initiative [at Adidas] was one called MakerLab,” said Mullins.

MakerLab linked Adidas up with young, up-and-coming designers and let them create limited edition designs for the shoe company based on one of its classic shoe silhouettes. Mullins thinks that those types of collaborations point the way to a potential future for the industry that could be incredibly compelling.

“The real vision for me is that I believe that the next Nike is an inverted Nike,” Mullins said. “I think what’s going to happen is that you’re going to have young kids on Roblox designing stuff in the virtual environments and it’ll pop there and you’ll have Nike or Adidas manufacture it.”

From that perspective, the Aglet app is more of a Trojan Horse for the big idea that Mullins wants to pursue. That’s to create a design studio to showcase the best virtual designs and bring them to the real world.

Mullins calls it the “Smart Aglet Sneaker Studio.” “[It’s] where you can design your own sneakers in the standard design style and we’ll put those in the game. We’ll let you design your own hoodies and then [Aglet] does become a YouTube for fashion design.”

The YouTube example comes from the starmaking power the platform has enabled for everyone from makeup artists to musicians like Justin Bieber, who was discovered on the social media streaming service.

“I want to build a virtual design platform where kids can build their own brands for virtual fashion brands and put them into this game environment that I’m building in the first phase,” said Mullins. “Once Bieber was discovered, YouTube meant he was being able to access an entire infrastructure to become a star. What Nike and Adidas are doing is something similar where they’re finding this talent out there and giving that designer access to their infrastructure and maybe could jumpstart a young kid’s career.”
